AI Prompt Optimization: How Politeness Is Costing OpenAI Millions


Admin - 20 Apr, 2025


Artificial intelligence has rapidly entered our daily lives, from smart assistants to coding tools, customer service bots, and creative content generators. But what most users don’t realize is that the way we talk to AI matters, not just for the quality of the output but also for cost.

Recently, OpenAI CEO Sam Altman made headlines by saying that the “please” and “thank you” messages users send to ChatGPT cost the company “tens of millions of dollars.” Being polite to an AI might feel like good manners, but every extra word adds to the work the model has to do.

Let’s explore how this happens and how you can apply AI prompt optimization to reduce costs, improve performance, and even contribute to a more sustainable AI ecosystem.

What Is AI Prompt Optimization?

AI prompt optimization is the process of crafting precise, efficient, and token-smart inputs for language models like ChatGPT. It focuses on getting better results with fewer words, minimizing unnecessary tokens while maintaining clarity.

In tools like ChatGPT, every word, punctuation mark, and space gets converted into “tokens.” These tokens are the atomic units of language models — and the more tokens you use, the higher the cost.

Poorly written prompts tend to:

- Waste tokens with redundant or polite language

- Add confusion by being vague or overly complex

- Require the model to guess the intent

On the other hand, optimized prompts:

- Deliver clear instructions in fewer words

- Guide the AI effectively toward the intended outcome

- Reduce computational load and cost

In essence, AI prompt optimization is like writing with purpose — it’s prompt engineering for efficiency.

Sam Altman’s Revelation: Why Politeness Is Expensive

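Altman made the comment on X, in reply to a user who wondered how much money OpenAI has lost in electricity costs from people saying “please” and “thank you” to its models; Livemint was among the outlets that covered it. Many ChatGPT users do talk to the model with human politeness, writing things like: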

“Hi ChatGPT, hope you’re doing well. Can you please help me with a quick summary of this article? Thanks!”

This seems harmless, even kind. But for an AI, every extra word counts. That prompt comes to roughly 25 tokens, while “Summarize this article.” conveys the same request in about five (exact counts depend on the model’s tokenizer).

Multiply this by millions of users a day and the overhead adds up. Altman put the cost at “tens of millions of dollars,” though he also called it money “well spent.” A standalone “thank you” message is especially expensive, because the model still generates a complete reply to it.

These extra tokens rarely improve the response, yet they are still processed, and API customers pay for them. That’s the hidden cost of not optimizing prompts.

How Tokens Work in AI Models Like ChatGPT

To understand the cost of politeness, we need to understand tokens.

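A token is a chunk of text. For English, a common rule of thumb is that one token is about four characters, or roughly three-quarters of a word. Approximate examples (exact counts depend on the model’s tokenizer):

- “Please” ≈ 1 token

- “Summarize this article” ≈ 3–4 tokens

- “Hey, how are you doing today?” ≈ 8 tokens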
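The four-characters-per-token rule of thumb gives a quick, rough estimate without running a tokenizer. Below is a minimal sketch; `estimate_tokens` is a hypothetical helper, and for exact counts you should use a real tokenizer such as OpenAI’s Tokenizer tool. The heuristic is crude for very short strings, where it tends to overestimate.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Roughly estimate a token count from character length.

    Uses the ~4 characters per token rule of thumb for English text.
    This is an approximation, not a tokenizer; real counts vary by model.
    """
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


verbose = ("Hi ChatGPT, hope you're doing well. Can you please help me "
           "with a quick summary of this article? Thanks!")
direct = "Summarize this article."
print(estimate_tokens(verbose), estimate_tokens(direct))  # 26 6
```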

OpenAI’s API charges per token, for both input (your prompt) and output (the model’s reply), usually at different rates. The original GPT-4 model, for example, cost $0.03 per 1,000 input tokens and $0.06 per 1,000 output tokens; newer models are much cheaper, and prices change over time.

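Now imagine a company using the OpenAI API to draft automated email replies. If each prompt carries an extra 15 tokens of fluff or polite filler and the company sends 100,000 messages a month, that’s 1.5 million unnecessary input tokens per month. At $0.03 per 1,000 tokens (GPT-4’s original input rate), that comes to about $45 a month; the waste grows with message volume, longer prompt templates, and more expensive models.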
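The arithmetic in this scenario generalizes to a one-line formula: wasted tokens per request × requests ÷ 1,000 × price per 1,000 tokens. Here is a minimal Python sketch, assuming a flat per-1,000-token input price (real bills also include output tokens and may apply discounts); `wasted_token_cost` is a hypothetical helper, not part of any OpenAI SDK.

```python
def wasted_token_cost(extra_tokens_per_request: int,
                      requests: int,
                      price_per_1k_tokens: float) -> float:
    """Return the dollar cost of tokens that add nothing to the task.

    Assumes a single flat price per 1,000 input tokens.
    """
    wasted_tokens = extra_tokens_per_request * requests
    return wasted_tokens / 1000 * price_per_1k_tokens


# 15 filler tokens per message at $0.03 per 1,000 input tokens.
print(f"${wasted_token_cost(15, 100_000, 0.03):,.2f}")     # $45.00
print(f"${wasted_token_cost(15, 10_000_000, 0.03):,.2f}")  # $4,500.00
```

The second call shows where “thousands of dollars” starts: at this price, the same 15-token overhead only reaches that range at millions of messages a month.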

So yes, trimming the fat matters.

How to Optimize AI Prompts (Best Practices)

Let’s look at practical ways to improve prompt structure for performance, cost, and clarity.

🔧 1. Be Direct and Specific

Instead of:

“Hi ChatGPT, could you please help me understand this concept a bit better?”

Use:

“Explain the concept of gravitational time dilation.”

🧹 2. Remove Filler and Politeness

“Thank you,” “please,” and “if you don’t mind” are great in human conversation but unnecessary in an AI prompt.

Example:

- Unoptimized: “Can you please summarize this paragraph for me? Thanks a lot!”

- Optimized: “Summarize this paragraph.”
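One way to apply this rule is to scan prompts for common filler before sending them. Below is a minimal sketch of such a check. `find_filler` and its phrase list are hypothetical and deliberately incomplete. The helper only flags phrases and does not rewrite the prompt, because rewording (“Can you summarize…” to “Summarize…”) usually needs a human touch.

```python
import re

# Illustrative, non-exhaustive list of filler phrases (an assumption, not an
# official list). Longer phrases are listed before their shorter prefixes
# so that "thanks a lot" is matched as one phrase rather than just "thanks".
FILLER_PATTERN = re.compile(
    r"\b(?:hope you['’]re doing well|if you don['’]t mind|thanks a lot|"
    r"thank you|thanks|please|could you|can you|for me|hi|hey)\b",
    re.IGNORECASE,
)


def find_filler(prompt: str) -> list[str]:
    """Return filler phrases found in the prompt, in order of appearance."""
    return [match.group(0) for match in FILLER_PATTERN.finditer(prompt)]


print(find_filler("Can you please summarize this paragraph for me? Thanks a lot!"))
# ['Can you', 'please', 'for me', 'Thanks a lot']
print(find_filler("Summarize this paragraph."))
# []
```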

🎯 3. Use Context Wisely

Don’t repeat information the model already knows from earlier turns in a conversation. Keep prompts lean.
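Here is why this matters for cost. With a stateless chat API such as OpenAI’s Chat Completions endpoint, the client resends the whole conversation history with every request. Anything already said is therefore billed again as input on each later turn, and repeating it adds still more. The sketch below assumes that model, ignores any prompt-caching discounts, and uses illustrative token counts rather than measurements; `billed_input_per_turn` is a hypothetical helper.

```python
def billed_input_per_turn(history: list[tuple[str, int]]) -> list[int]:
    """Return the input tokens billed for each user request.

    Assumes a stateless chat API: every request resends all earlier
    messages, so each request's input is the running total of tokens
    up to and including the new user message.
    """
    billed = []
    running_total = 0
    for role, tokens in history:
        running_total += tokens
        if role == "user":
            # A new request is sent each time the user speaks.
            billed.append(running_total)
    return billed


# Illustrative token counts, not measurements.
conversation = [
    ("user", 20),
    ("assistant", 200),
    ("user", 15),
    ("assistant", 180),
    ("user", 10),
]
per_turn = billed_input_per_turn(conversation)
print(per_turn, sum(per_turn))  # [20, 235, 425] 680
```

If the third user message also repeated the 20-token request from the first turn, those 20 tokens would be billed again on that request and on every request after it.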

🧠 4. Break Complex Prompts into Steps

Long, tangled prompts make it easier for a model to miss or misread part of the request. Break them down into separate commands or clearly structured instructions.
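One way to give a multi-part request a clear structure is to state the task once and number the sub-steps. A minimal sketch; `build_stepwise_prompt` and the example task and steps are hypothetical:

```python
def build_stepwise_prompt(task: str, steps: list[str]) -> str:
    """Format one task and its sub-steps as a short, numbered instruction block."""
    lines = [task]
    lines += [f"{number}. {step}" for number, step in enumerate(steps, start=1)]
    return "\n".join(lines)


prompt = build_stepwise_prompt(
    "Review the attached function.",
    ["List any bugs.", "Suggest a fix for each bug.", "Rate readability from 1 to 5."],
)
print(prompt)
```

Each step stays a short imperative, so the structure adds clarity without reintroducing filler.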

📊 Prompt Optimization Example: Before vs After

| Prompt version | Approx. tokens | Notes |
|---|---|---|
| “Hi, could you please tell me what is quantum entanglement in simple terms? Thanks!” | ~21 | Polite but verbose |
| “Explain quantum entanglement simply.” | ~5 | Optimized, direct |

Result: both typically produce similar answers, but the optimized prompt uses roughly 75% fewer input tokens.
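Using the table’s approximate counts, the saving works out as:

```latex
\text{savings} = \frac{21 - 5}{21} = \frac{16}{21} \approx 0.76 \approx 76\%
```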

Prompt Optimization Use Cases

Whether you’re building an AI-powered chatbot or using ChatGPT for personal tasks, optimizing prompts brings measurable value.

🧑‍💻 Developers

- Reduce API costs on GPT-based tools

- Improve response consistency

- Minimize latency

🧠 Researchers & Students

- Get precise answers faster

- Avoid noisy outputs from vague queries

💼 Businesses

- Optimize chatbot performance

- Save money at scale

✍️ Content Creators

- Generate content ideas faster

- Reduce need for post-editing


Environmental Impact of Poor Prompt Design

It’s not just about cost — it’s about carbon.

Every token processed requires electricity, and on a large scale, it contributes to the AI carbon footprint. Larger prompts consume more compute time, more memory, and more cooling power in data centers.

By applying AI prompt optimization, we’re not only saving money — we’re making AI usage more eco-conscious. The greener the prompt, the smaller the footprint.

Tools & Tips to Help You Optimize Prompts

Here are some tools and methods to improve your prompt-writing workflow:

🛠 Tools:

- OpenAI Tokenizer: See how many tokens your prompt uses.

- PromptLayer: Analyze and version your prompts.

- LangChain Prompt Templates: For structured, clean prompts in apps.
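Prompt templates rest on a simple idea: fix the instruction wording once and fill in only the parts that change per request. As a dependency-free sketch of that idea (not LangChain’s API), Python’s standard `string.Template` works. The template text and the `render_summary_prompt` helper are hypothetical.

```python
from string import Template

# The instruction wording is written once; only $max_words and $text
# change from request to request.
SUMMARY_PROMPT = Template("Summarize in $max_words words or fewer:\n$text")


def render_summary_prompt(text: str, max_words: int = 50) -> str:
    """Fill the summary template; substitute() raises KeyError if a field is missing."""
    return SUMMARY_PROMPT.substitute(text=text, max_words=max_words)


print(render_summary_prompt("Tokens are chunks of text that language models process.", 20))
```

Keeping the wording in one template also makes it easy to trim a few tokens once and have every request benefit.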

🧠 Tips:

- Test prompts with different models

- Keep the instruction part of a prompt under about 100 tokens where possible

- Use examples in the prompt only if essential

Conclusion

AI prompt optimization is no longer a nice-to-have — it’s a necessity. Whether you’re building apps with OpenAI’s API or just chatting with ChatGPT for fun, your prompt structure affects:

- 💸 Cost

- ⚡ Performance

- 🌍 Sustainability

With leaders like Sam Altman highlighting how something as simple as “thank you” can cost millions, it’s time to rethink how we interact with AI.

So next time you talk to ChatGPT, keep it short, smart, and sharp.

❓ FAQs

What is AI prompt optimization?

AI prompt optimization is the process of refining inputs to AI models to cut token usage and cost while improving output clarity.

Does saying “please” and “thank you” to ChatGPT cost money?

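Indirectly. The extra words add tokens, which API customers pay for per token, so the cost matters most in large-scale or API use. ChatGPT app users are not billed per token; there, the extra processing shows up in OpenAI’s own compute costs.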

How can I optimize my prompts?

Be clear, concise, and specific. Avoid filler language, break down complex instructions, and use tools like tokenizers.

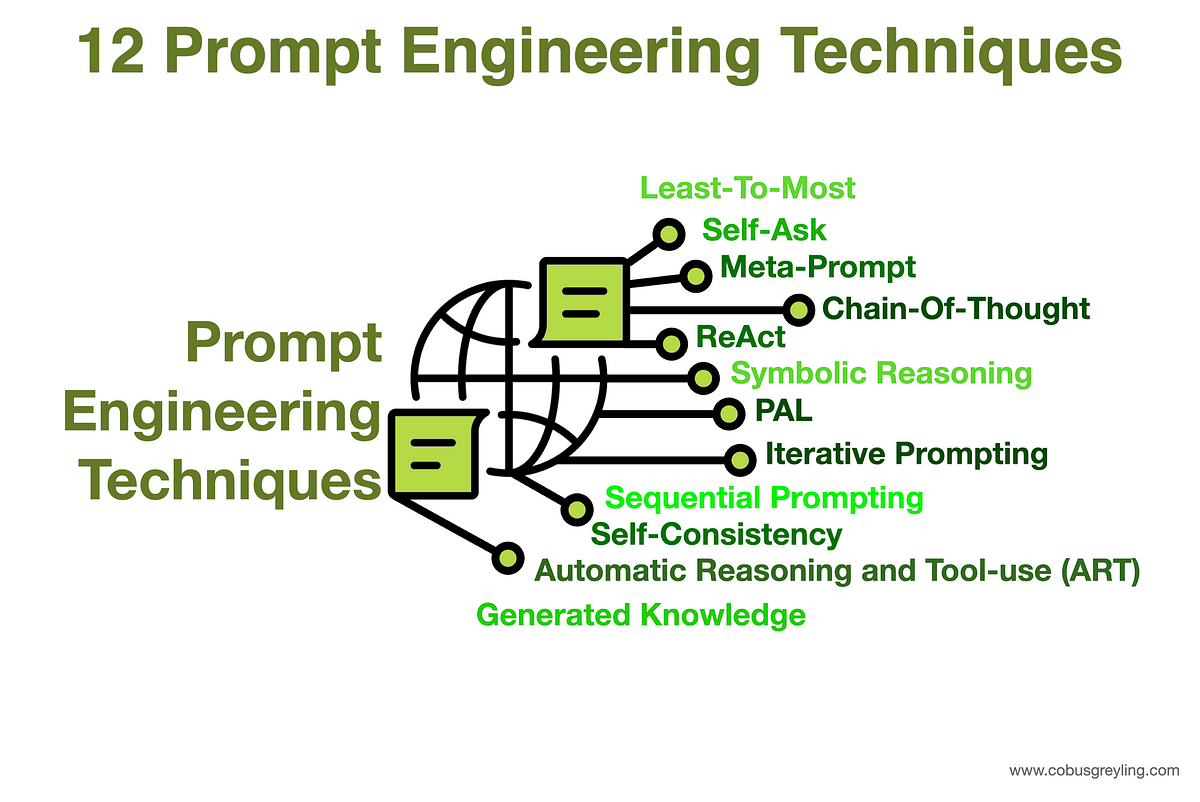

Is prompt engineering the same as prompt optimization?

Prompt engineering is broader (includes creative strategies), while optimization focuses on efficiency and cost-effectiveness.